This yields a list of squared errors, which are then summed; the total is the unexplained variation. Research what others have done and incorporate their findings into your model. Before beginning the regression analysis, develop an idea of which variables are important, how they relate to one another, and what signs and magnitudes you expect their coefficients to have.

R-squared (R²) is a statistical measure that represents the proportion of the variance in a dependent variable that is explained by one or more independent variables in a regression model. Whereas correlation describes the strength of the relationship between an independent and a dependent variable, R-squared describes the extent to which the variance of one variable explains the variance of the other. So, if a model’s R² is 0.50, approximately half of the observed variation can be explained by the model’s inputs. In other words, the R-squared of a regression is the fraction of the variation in the dependent variable that is accounted for (or predicted by) the independent variables.
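A minimal sketch of the calculation, assuming NumPy and made-up data: R-squared is one minus the ratio of the unexplained variation (the sum of squared errors) to the total variation of the dependent variable around its mean. The helper name `r_squared` is illustrative.

```python
import numpy as np

def r_squared(y, y_pred):
    """Return R-squared: the share of the variation in y explained by y_pred."""
    y = np.asarray(y, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    sse = np.sum((y - y_pred) ** 2)     # unexplained variation (sum of squared errors)
    sst = np.sum((y - y.mean()) ** 2)   # total variation around the mean
    return 1.0 - sse / sst

# Hypothetical observations and a least-squares straight line through them
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])
slope, intercept = np.polyfit(x, y, deg=1)
print(round(r_squared(y, slope * x + intercept), 4))
```

A model that predicts every point exactly scores 1, and a model that always predicts the mean scores 0.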

For example, the relationship between rash driving and the number of road accidents a driver has is best studied through regression. You’re probably familiar with plotting line graphs with one X axis and one Y axis. The X variable is sometimes called the independent variable and the Y variable is called the dependent variable.

For example, you might guess that there’s a connection between how much you eat and how much you weigh; regression analysis can help you quantify that. Regression analysis will provide you with an equation for a graph so that you can make predictions about your data. For example, if you’ve been putting on weight over the last few years, it can predict how much you’ll weigh in ten years’ time if you continue to put on weight at the same rate.
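As a sketch of that kind of prediction, assuming NumPy and invented yearly weigh-ins: fit a straight line to past measurements, then extend it ten years past the last observation. The extrapolation is only as good as the assumption that the rate of gain stays constant.

```python
import numpy as np

# Hypothetical weigh-ins (kg), one per year
years = np.array([2019, 2020, 2021, 2022, 2023])
weights = np.array([70.0, 71.5, 73.0, 74.5, 76.0])

# Least-squares line: weight = slope * year + intercept
slope, intercept = np.polyfit(years, weights, deg=1)

# Extend the line ten years beyond the last measurement
target_year = years[-1] + 10
predicted = slope * target_year + intercept
print(f"gaining about {slope:.1f} kg/year; predicted weight in {target_year}: {predicted:.1f} kg")
```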

Statistics How To

In 1846, the French physicist Auguste Bravais (1811–1863) first developed what would become the correlation coefficient. After examining forearm and height measurements, Francis Galton independently rediscovered the concept of correlation in 1888 and demonstrated its application in the study of heredity, anthropology, and psychology.

The most common form of regression analysis is linear regression, in which a researcher finds the line (or a more complex linear combination) that most closely fits the data according to a specific mathematical criterion. For example, the method of ordinary least squares computes the unique line (or hyperplane) that minimizes the sum of squared differences between the observed values and the values predicted by that line (or hyperplane). Regression analysis generates an equation to describe the statistical relationship between one or more predictor variables and the response variable.
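For a single predictor, the ordinary least squares line has a closed form: the slope is the sum of cross-deviations of x and y divided by the sum of squared deviations of x, and the line passes through the point of means. The sketch below, which assumes NumPy and uses made-up data, computes that directly (the helper `ols_line` is illustrative) and cross-checks it with `np.linalg.lstsq`, which also handles the multi-predictor (hyperplane) case.

```python
import numpy as np

def ols_line(x, y):
    """Closed-form least-squares slope and intercept for one predictor."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx, dy = x - x.mean(), y - y.mean()
    slope = np.sum(dx * dy) / np.sum(dx ** 2)
    intercept = y.mean() - slope * x.mean()   # line passes through (mean x, mean y)
    return slope, intercept

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
y = np.array([3.1, 4.9, 7.2, 8.8, 11.1, 12.9])
slope, intercept = ols_line(x, y)

# Same fit with the general solver: a column of ones supplies the intercept
X = np.column_stack([np.ones_like(x), x])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
print(f"closed form: intercept={intercept:.4f}, slope={slope:.4f}")
print(f"lstsq:       intercept={coef[0]:.4f}, slope={coef[1]:.4f}")
```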

The latter is especially important when researchers hope to estimate causal relationships using observational data. Regression analysis is a form of predictive modelling technique that investigates the relationship between a dependent (target) variable and one or more independent variables (predictors). This technique is used for forecasting, time series modelling, and finding causal relationships between variables.

The adjusted R-squared compares the explanatory power of regression models that contain different numbers of predictors. Adding a predictor to a model never decreases R-squared, even when the new predictor is irrelevant. In an overfitting condition, a misleadingly high R-squared is obtained even when the model actually has a decreased ability to predict. Calculating the unexplained variation involves taking the data points (observations) of the dependent and independent variables and finding the line of best fit, often from a regression model. From there you calculate the predicted values, subtract them from the actual values, and square the results.
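A sketch of both effects, assuming NumPy, simulated data, and the common formula adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1), where n is the number of observations and p the number of predictors (intercept not counted). Adding a pure-noise predictor cannot lower plain R-squared, while the adjusted value is penalized for the extra term. The helpers `fit_r2` and `adjusted_r2` are illustrative names.

```python
import numpy as np

def fit_r2(X, y):
    """Fit OLS with an intercept and return plain R-squared."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    resid = y - X1 @ coef
    return 1.0 - np.sum(resid ** 2) / np.sum((y - y.mean()) ** 2)

def adjusted_r2(r2, n, p):
    """Penalize R-squared for the number of predictors p (intercept not counted)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)

rng = np.random.default_rng(0)
n = 30
x = rng.normal(size=n)
y = 2.0 * x + rng.normal(size=n)   # y truly depends on x only
noise = rng.normal(size=n)         # an irrelevant extra predictor

r2_small = fit_r2(x.reshape(-1, 1), y)
r2_big = fit_r2(np.column_stack([x, noise]), y)

print(f"R2:          {r2_small:.4f} -> {r2_big:.4f}")
print(f"adjusted R2: {adjusted_r2(r2_small, n, 1):.4f} -> {adjusted_r2(r2_big, n, 2):.4f}")
```

The adjusted value falls whenever the new predictor contributes less than its penalty; plain R-squared can only stay the same or rise.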

Regression analysis provides detailed insight that can be applied to further improve products and services.

However, many people just call them the independent and dependent variables. More advanced regression techniques (like multiple regression) use multiple independent variables. A line chart isn’t always helpful in a real-world regression analysis.

What is an example of regression analysis?

Regression analysis is a powerful statistical method that allows you to examine the relationship between two or more variables of interest. While there are many types of regression analysis, at their core they all examine the influence of one or more independent variables on a dependent variable.

Chart the Data

Regression analysis will also give you a slew of statistics (including p-values and a correlation coefficient) that help you judge how well your model fits the data. Most elementary stats courses cover very basic techniques, like making scatter plots and performing linear regression. However, you may come across more advanced techniques like multiple regression.

What does regression analysis mean?

Regression analysis is a method of determining the relationship between two sets of variables when one set is dependent on the other. In business, regression analysis can be used to estimate how advertising has affected sales, or how production is affected by the number of employees working in a plant.

The multivariate probit model is a standard method of estimating a joint relationship between several binary dependent variables and some independent variables. For categorical variables with more than two values there is the multinomial logit. For ordinal variables with more than two values, there are the ordered logit and ordered probit models. Censored regression models may be used when the dependent variable is only sometimes observed, and Heckman correction type models may be used when the sample is not randomly selected from the population of interest.

How does regression analysis work?

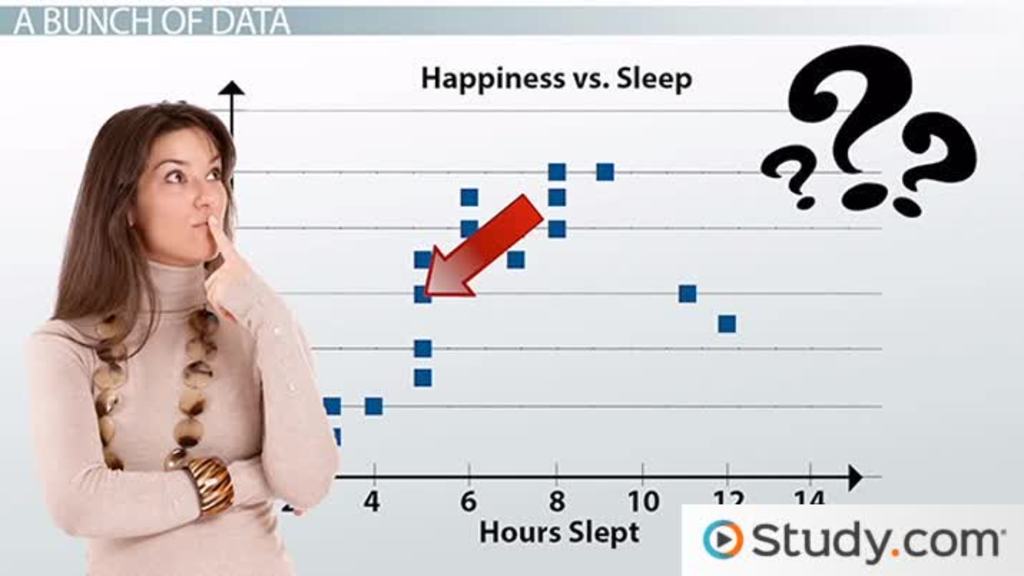

For example, correlating advertising spending with sales will seldom produce a straight line, because today’s advertising may not affect sales until a day, a week, or even a month later. In these cases, a scatter chart will help you see the relationship between the two. A scatter chart plots each pair of values as a single dot, with one variable on the X axis and the other on the Y axis, so the overall pattern of dots shows how the variables move together.
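To make the delay concrete, here is a minimal sketch assuming NumPy and hypothetical weekly figures in which sales respond to advertising exactly two weeks later. It compares ad spend with sales shifted by several lags, and the correlation peaks at the true delay. The function name `lagged_correlation` is illustrative.

```python
import numpy as np

def lagged_correlation(ad_spend, sales, lag):
    """Pearson correlation between ad spend in period t and sales in period t + lag."""
    a = np.asarray(ad_spend, dtype=float)
    s = np.asarray(sales, dtype=float)
    if lag > 0:
        a, s = a[:-lag], s[lag:]
    return np.corrcoef(a, s)[0, 1]

# Hypothetical weekly data: sales respond to advertising two weeks later
ad_spend = np.array([5, 1, 4, 2, 6, 3, 7, 2, 5, 4, 1, 6], dtype=float)
sales = np.empty_like(ad_spend)
sales[:2] = 10.0                        # weeks with no earlier advertising in the sample
sales[2:] = 10.0 + 3.0 * ad_spend[:-2]  # sales_t = 10 + 3 * ad_spend_(t-2)

for lag in range(4):
    print(lag, round(lagged_correlation(ad_spend, sales, lag), 3))
```

Plotting ad spend against sales shifted by the best lag would show the dots lining up far more clearly than the unshifted scatter chart.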

After you use Minitab Statistical Software to fit a regression model and verify the fit by checking the residual plots, you’ll want to interpret the results, starting with the p-values and coefficients that appear in the output for linear regression analysis.

The response variable may also be non-continuous (“limited” to lie on some subset of the real line). For binary (zero or one) variables, if the analysis proceeds with least-squares linear regression, the model is called the linear probability model. Nonlinear models for binary dependent variables include the probit and logit models.
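A sketch contrasting the two approaches on a hypothetical 0/1 outcome, assuming NumPy. The linear probability model is plain least squares, so its fitted "probabilities" can fall below 0 or above 1; the logit model, fitted here by a hand-written Newton–Raphson loop (an illustration, not any particular package's routine), keeps every fitted probability strictly between 0 and 1.

```python
import numpy as np

# Hypothetical binary outcome (0/1) against one predictor
x = np.arange(10, dtype=float)
y = np.array([0, 0, 0, 0, 1, 0, 1, 1, 1, 1], dtype=float)
X = np.column_stack([np.ones_like(x), x])   # intercept column plus predictor

# Linear probability model: ordinary least squares on the 0/1 outcome
lpm_coef, *_ = np.linalg.lstsq(X, y, rcond=None)
lpm_fitted = X @ lpm_coef

# Logit model: maximum likelihood via Newton-Raphson
beta = np.zeros(2)
for _ in range(25):
    p = 1.0 / (1.0 + np.exp(-X @ beta))              # current predicted probabilities
    gradient = X.T @ (y - p)                         # score of the log-likelihood
    information = X.T @ (X * (p * (1 - p))[:, None]) # negative Hessian
    beta += np.linalg.solve(information, gradient)
logit_fitted = 1.0 / (1.0 + np.exp(-X @ beta))

print(f"LPM fitted range:   {lpm_fitted.min():.3f} to {lpm_fitted.max():.3f}")
print(f"logit fitted range: {logit_fitted.min():.3f} to {logit_fitted.max():.3f}")
```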

When to Use Multiple Regression Analysis

Galton’s later statistical study of the probability of extinction of surnames led to the concept of Galton–Watson stochastic processes. Galton was able to further his notion of regression by collecting and analyzing data on human stature.

An alternative to such procedures is linear regression based on polychoric (or polyserial) correlations between the categorical variables. Such procedures differ in the assumptions they make about the distribution of the variables in the population. If the dependent variable is a count, taking small non-negative integer values that record how often an event occurs, then count models such as Poisson regression or the negative binomial model may be used.

The first step in finding a linear regression equation is to determine whether there is a relationship between the two variables.
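For the count models mentioned above, a minimal Poisson regression sketch, assuming NumPy and hypothetical event counts. It uses a log link, log(E[y]) = b0 + b1·x, fitted by a hand-written Newton–Raphson loop rather than a library call; exp(b1) is the factor by which the expected count changes for each one-unit increase in x.

```python
import numpy as np

# Hypothetical counts of an event against one predictor
x = np.array([0, 1, 2, 3, 4, 5], dtype=float)
y = np.array([1, 1, 2, 4, 6, 10], dtype=float)
X = np.column_stack([np.ones_like(x), x])

# Poisson regression with a log link, fitted by Newton-Raphson
beta = np.array([np.log(y.mean()), 0.0])   # start at the constant-mean model
for _ in range(50):
    mu = np.exp(X @ beta)                   # expected counts, always positive
    gradient = X.T @ (y - mu)               # score of the Poisson log-likelihood
    information = X.T @ (X * mu[:, None])   # Fisher information
    step = np.linalg.solve(information, gradient)
    beta += step
    if np.max(np.abs(step)) < 1e-10:
        break

print("coefficients (b0, b1):", beta)
print("expected-count multiplier per unit of x:", np.exp(beta[1]))
```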

In linear regression, we predict the mean of the dependent variable for given values of the independent variables. Since the mean does not describe the whole distribution, modeling the mean is not a full description of the relationship between the dependent and independent variables. Quantile regression addresses this by predicting a chosen quantile (or percentile) of the dependent variable for given values of the independent variables.

Plain R-squared is easiest to interpret in a simple linear regression model with one explanatory variable. In a multiple regression with several independent variables, R-squared never decreases as predictors are added, so the adjusted R-squared should be used when comparing models with different numbers of predictors.
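Quantile regression, mentioned above, replaces squared errors with the "pinball" (check) loss, which weights under-predictions by the quantile level tau and over-predictions by 1 − tau. The sketch below assumes NumPy and hypothetical data, and relies on a standard property of the problem's linear-programming form: with two parameters, some optimal line passes through two data points, so trying every pair finds an optimum. This brute-force search is only for illustration on small data; practical tools solve the linear program directly. The helper names are illustrative.

```python
import itertools
import numpy as np

def pinball_loss(residuals, tau):
    """Check loss: under-predictions weighted by tau, over-predictions by 1 - tau."""
    return np.sum(np.where(residuals >= 0, tau * residuals, (tau - 1) * residuals))

def quantile_line(x, y, tau):
    """Exact quantile-regression line for small data sets, by trying every pair of points."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    best = None
    for i, j in itertools.combinations(range(len(x)), 2):
        if x[i] == x[j]:
            continue                        # a vertical line is not a valid fit
        slope = (y[j] - y[i]) / (x[j] - x[i])
        intercept = y[i] - slope * x[i]
        loss = pinball_loss(y - (intercept + slope * x), tau)
        if best is None or loss < best[0]:
            best = (loss, intercept, slope)
    return best[1], best[2]

# Hypothetical data on the line y = 1 + 2x, except for one large outlier
x = np.array([0, 1, 2, 3, 4], dtype=float)
y = np.array([1, 3, 5, 7, 100], dtype=float)

print("median line (intercept, slope):", quantile_line(x, y, 0.5))
print("least-squares (intercept, slope):", np.polyfit(x, y, 1)[::-1])
```

The median (tau = 0.5) line stays on the bulk of the data, while the least-squares line, which models the mean, is pulled toward the outlier.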

You’ll also need your data in x-y format (i.e., two columns of data: one for the independent variable and one for the dependent variable). Linear regression is one of the most widely used statistical techniques; it models the relationship between two sets of variables. The result is a linear regression equation that can be used to make predictions about the data.

If the dots follow a clear pattern, such as a rising or falling trend, the variables are correlated. In regression with multiple independent variables, each coefficient tells you how much the dependent variable is expected to change when that independent variable increases by one unit, holding all the other independent variables constant. Keep in mind the units in which your variables are measured. Coming up with a prediction equation like this is only useful if the independent variables in your dataset have some correlation with your dependent variable.
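A sketch of the "holding all the other independent variables constant" idea, assuming NumPy and hypothetical noise-free data generated from y = 3 + 2·x1 − 1·x2 with correlated predictors. Multiple regression recovers each coefficient, while a simple regression on x1 alone absorbs part of x2's effect and gives a different slope.

```python
import numpy as np

# Hypothetical data: y depends on two correlated predictors, x1 and x2
x1 = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0])
x2 = np.array([2.0, 1.0, 4.0, 3.0, 6.0, 5.0, 8.0, 7.0])
y = 3.0 + 2.0 * x1 - 1.0 * x2            # exact relationship, no noise

# Multiple regression: intercept, x1, x2
X = np.column_stack([np.ones_like(x1), x1, x2])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
print("intercept, b1, b2:", coef)        # each slope holds the other predictor constant

# Simple regression on x1 alone mixes in x2's effect, because x2 rises with x1
print("slope of y on x1 alone:", np.polyfit(x1, y, 1)[0])
```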

Building on the results of others makes it easier both to collect the correct data and to specify the best regression model without the need for data mining. Regression analysis is primarily used for two conceptually distinct purposes. First, regression analysis is widely used for prediction and forecasting, where its use has substantial overlap with the field of machine learning. Second, in some situations regression analysis can be used to infer causal relationships between the independent and dependent variables. Importantly, regressions by themselves only reveal relationships between a dependent variable and a collection of independent variables in a fixed dataset.

The p-value for each coefficient tests whether that variable has any relationship with the dependent variable: a low p-value indicates that a relationship as strong as the one observed in the sample would be unlikely if the true coefficient were zero.

Correlation and regression are two analyses based on multivariate distributions. A multivariate distribution is a distribution of multiple variables. Correlation measures whether, and how strongly, two variables x and y are associated. Regression analysis, on the other hand, predicts the value of the dependent variable from known values of the independent variable(s), assuming an average mathematical relationship between two or more variables.
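The difference can be made concrete with a short sketch, assuming NumPy and hypothetical paired measurements. The correlation coefficient r is a single symmetric number, while regression is directional: the slope for predicting y from x is r · s_y / s_x, and the slope for predicting x from y is r · s_x / s_y, so the two slopes differ unless the spreads are equal.

```python
import numpy as np

# Hypothetical paired measurements
x = np.array([2.0, 4.0, 5.0, 7.0, 9.0, 10.0])
y = np.array([1.5, 3.0, 2.5, 5.5, 6.0, 8.0])

r = np.corrcoef(x, y)[0, 1]             # symmetric: corr(x, y) == corr(y, x)
slope_y_on_x = r * y.std() / x.std()    # regression slope for predicting y from x
slope_x_on_y = r * x.std() / y.std()    # regression slope for predicting x from y

print(f"r = {r:.3f}")
print(f"y-on-x slope = {slope_y_on_x:.3f}, x-on-y slope = {slope_x_on_y:.3f}")
```

The product of the two slopes equals r², which ties the two analyses together.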

Simple linear regression plots one independent variable X against one dependent variable Y. Technically, in regression analysis, the independent variable is usually called the predictor variable and the dependent variable is called the criterion variable.